Compositing Using Facebook Surround Data

CaraVR's Facebook Surround toolset lets you quickly extract depth information and construct point clouds for depth-dependent workflows. The depth data is particularly useful for positioning 3D elements accurately and then rendering them into 2D through C_RayRender.

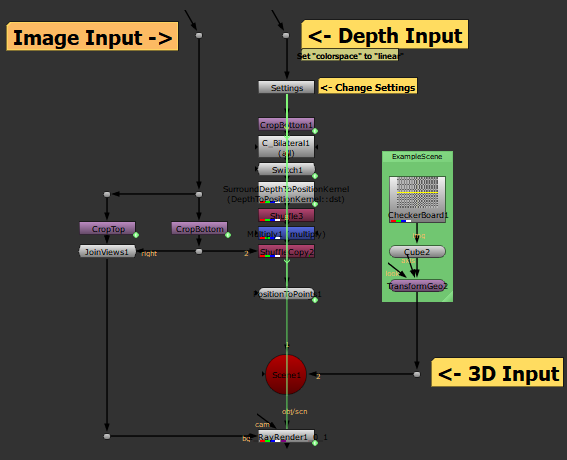

To set up the toolset:

| 1. | Navigate to CaraVR > Toolsets > Facebook_Surround_Depth_To_Points. |

The preset node tree is added to the Node Graph.

| 2. | If left and right views don't already exist in your script, Nuke prompts you to create them. |

| 3. | Connect the source image to the Image Input and the depth information to the Depth Input. |

Note: Ensure that the colorspace of the Read node connected to the Depth Input is set to linear.

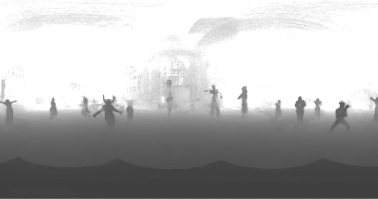

| 4. | Connect a Viewer to the depth Read node to view the depth data. Darker areas are closer to the rig and lighter areas are farther away. |

In the example, the characters are in the foreground and the set is in the background.

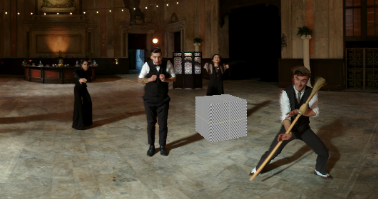

| 5. | Attach a Viewer to the C_RayRender node to view the scene. |

A BlinkScript kernel processes the depth and camera data, converts them into a point cloud, and passes the result to C_RayRender.
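To make the depth-to-points step more concrete, here is a minimal Python sketch of how a lat-long (equirectangular) depth map can be turned into 3D points. It is not the toolset's BlinkScript kernel: it assumes depth is stored in meters measured from the rig center, that the image center (u=0.5, v=0.5) faces down -Z with Y up, and it ignores the camera data the real kernel uses. `latlong_direction`, `depth_to_points`, and the `step` parameter (a rough stand-in for Point Details) are hypothetical names chosen for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def latlong_direction(u: float, v: float) -> Point:
    """Unit view direction for normalized lat-long coordinates (u, v) in [0, 1].

    Assumed convention: u=0.5, v=0.5 looks down -Z, and Y is up.
    """
    lon = (u - 0.5) * 2.0 * math.pi  # longitude, -pi..pi across the width
    lat = (0.5 - v) * math.pi        # latitude, +pi/2 at the top row
    return (math.cos(lat) * math.sin(lon),
            math.sin(lat),
            -math.cos(lat) * math.cos(lon))


def depth_to_points(depth: List[List[float]], step: int = 1) -> List[Point]:
    """Convert a lat-long depth map (meters from the rig) into 3D points.

    `step` skips pixels to thin the cloud (hypothetical stand-in for Point Details).
    """
    height, width = len(depth), len(depth[0])
    points: List[Point] = []
    for row in range(0, height, step):
        for col in range(0, width, step):
            d = depth[row][col]
            if d <= 0.0:
                continue  # treat non-positive depth as missing data
            u = (col + 0.5) / width   # sample at pixel centers
            v = (row + 0.5) / height
            dx, dy, dz = latlong_direction(u, v)
            points.append((d * dx, d * dy, d * dz))  # scale the ray by its depth
    return points
```

For example, a 4 × 2 depth map filled with 2.0 yields eight points, each 2 m from the origin; with `step=2` only two of those pixels are sampled. Each point is simply its pixel's viewing ray scaled by the stored depth, which is why the depth values must stay linear and in consistent units.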

The default toolset adds a cube to the scene and uses the depth data to position it accurately. You can swap out the example geometry to test other objects within the scene.

| 6. | You can also examine the scene in the 3D Viewer by pressing Tab. Navigate around the 360° scene using the standard Nuke navigation controls: hold Ctrl/Cmd and drag to rotate the camera, and hold Alt and drag to pan. |

| 7. | Adjust the values in the Settings node to control the point cloud's appearance: |

• Eye Separation - sets the distance, in meters, between the two views as seen from the viewer's perspective. Setting the Eye Separation, or interpupillary distance (IPD), too low makes objects in the scene appear crushed horizontally, while setting it too high can leave holes in the stitch.

• Point Details - controls the number of points in the point cloud. Higher values produce a denser point cloud but take longer to render.

• Cutoff - sets the depth, in meters, beyond which points are omitted from the point cloud. Lowering the value removes more of the points farthest from the rig.

• Filter Depth Map - when enabled, applies a bilateral filter to the depth map to prevent stepping artifacts.

• ZInv Depth Encoding - when enabled, interprets the depth image using ZInv depth encoding, which reverses the way depth is represented in the image and provides smoother depth maps. With the inversion, smaller values in the image represent greater depth; by default, smaller values represent less depth.
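The Settings controls above can be illustrated with a few small Python helpers. This is a hedged sketch, not CaraVR's implementation: it assumes ZInv pixels store 1/depth (so small values are far away), that Cutoff is a simple distance threshold in meters, and that the eye separation is split symmetrically around the rig center; the bilateral filter runs along a single row rather than over the full 2D depth map. All function and parameter names here are hypothetical.

```python
import math
from typing import List, Optional, Tuple


def decode_depth(value: float, zinv: bool) -> Optional[float]:
    """Return depth in meters from a stored pixel value.

    Assumption: with ZInv enabled the pixel stores 1/depth; otherwise it
    stores depth directly.
    """
    if value <= 0.0:
        return None  # no usable depth (or infinitely far under ZInv)
    return 1.0 / value if zinv else value


def apply_cutoff(depths: List[Optional[float]], cutoff: float) -> List[Optional[float]]:
    """Drop samples farther than `cutoff` meters, mimicking the Cutoff setting."""
    return [d if d is not None and d <= cutoff else None for d in depths]


def eye_offsets(eye_separation: float) -> Tuple[float, float]:
    """Horizontal offsets (meters) of the left and right eyes from the rig center."""
    half = eye_separation / 2.0
    return (-half, half)


def bilateral_1d(values: List[float], radius: int,
                 sigma_space: float, sigma_range: float) -> List[float]:
    """Edge-preserving smoothing of one row of depth values.

    Each neighbor is weighted by its distance in pixels (spatial term) and by
    how different its depth is from the center pixel (range term).
    """
    n = len(values)
    out: List[float] = []
    for i in range(n):
        total = 0.0
        weight_sum = 0.0
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            w_space = math.exp(-((j - i) ** 2) / (2.0 * sigma_space ** 2))
            w_range = math.exp(-((values[j] - values[i]) ** 2) / (2.0 * sigma_range ** 2))
            total += w_space * w_range * values[j]
            weight_sum += w_space * w_range  # center pixel contributes weight 1
        out.append(total / weight_sum)
    return out
```

The range term in `bilateral_1d` is what keeps depth edges sharp: neighbors whose depth differs greatly from the center pixel contribute almost nothing, so smoothing happens within a surface rather than across the boundary between, say, a foreground character and the background set.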