MotionBlur

MotionBlur (NukeX and Nuke Studio only) uses the same techniques and technology as the motion blur found in Kronos to add realistic motion blur to a sequence, but presents the controls in a simpler, more user-friendly way.

Inputs and Controls

| Connection Type | Connection Name | Function |
|---|---|---|
| Inputs | FgVecs | If the motion in your input sequence has been estimated before (for example, using FurnaceCore’s F_VectorGenerator or third-party software), you can supply one or more vector sequences to MotionBlur to save processing time. If you have separate vectors for the background and foreground, you should connect them to the appropriate inputs and supply the matte that was used to generate them to the Matte input. If you have a single set of vectors, you should connect it to the FgVecs input. |
| | BgVecs | See FgVecs. |
| | Matte | An optional matte of the foreground, which may improve the motion estimation by reducing the dragging of pixels that can occur between foreground and background objects. |
| | Source | The sequence to receive the motion blur effect. |
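The connection rule for precomputed vectors can be summarized in a short sketch. The helper name `plan_vector_inputs` and its arguments are hypothetical and used only for illustration; the function does not call any Nuke API, it just maps whatever labels you pass in onto the input names listed in the table.

```python
from typing import Dict, Optional


def plan_vector_inputs(
    fg_vectors: Optional[str] = None,
    bg_vectors: Optional[str] = None,
    matte: Optional[str] = None,
) -> Dict[str, str]:
    """Map precomputed vector sequences onto MotionBlur input names.

    Hypothetical helper: the arguments are plain labels (for example,
    node names). Only FgVecs, BgVecs, and Matte come from the table.
    """
    plan: Dict[str, str] = {}
    if fg_vectors and bg_vectors:
        # Separate foreground and background vectors: each goes to its
        # own input.
        plan["FgVecs"] = fg_vectors
        plan["BgVecs"] = bg_vectors
    elif fg_vectors or bg_vectors:
        # A single set of vectors is connected to FgVecs.
        plan["FgVecs"] = fg_vectors or bg_vectors
    if matte is not None:
        # The matte is optional; with separate vectors it should be the
        # matte that was used to generate them.
        plan["Matte"] = matte
    return plan
```

With no vector arguments the plan is empty, which corresponds to letting MotionBlur estimate the motion itself from the Source input.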

|

Control (UI) |

Knob (Scripting) |

Default Value |

Function |

|

MotionBlur Tab |

|||

|

Local GPU |

gpuName |

N/A |

Displays the GPU used for rendering when Use GPU if available is enabled. Local GPU displays Not available when: • Use CPU is selected as the default blink device in the Preferences. • no suitable GPU was found on your system. • it was not possible to create a context for processing on the selected GPU, such as when there is not enough free memory available on the GPU. You can select a different GPU, if available, by navigating to the Preferences and selecting an alternative from the default blink device dropdown. Note: Selecting a different GPU requires you to restart Nuke before the change takes effect. |

|

Use GPU if available |

useGPUIfAvailable |

enabled |

When enabled, rendering occurs on the Local GPU specified, if available, rather than the CPU. Note: Enabling this option with no local GPU allows the script to run on the GPU whenever the script is opened on a machine that does have a GPU available.

|

|

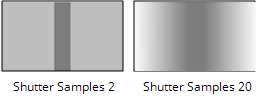

Shutter Samples |

shutterSamples |

3 |

Sets the number of in-between images used to create an output image during the shutter time. Increase this value for smoother motion blur, but note that it takes much longer to render.

|

|

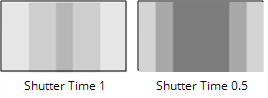

Shutter Time |

shutterTime |

0.75 |

Sets the equivalent shutter time of the retimed sequence. For example, a shutter time of 0.5 is equivalent to a 180 degree mechanical shutter, so at 24 frames per second the exposure time will be 1/48th of a second. Imagine a gray rectangle moving left to right horizontally across the screen. The figures below show how Shutter Time affects the retimed rectangle.

|

|

Method |

motionEstimation |

Dependent on script |

Sets the method of calculating motion estimation vectors: • Local - uses local block matching to estimate motion vectors. This method is faster to process, but can lead to artifacts in the output. • Regularized - uses semi-global motion estimation to produce more consistent vectors between regions. Note: Scripts loaded from previous versions of Nuke default to Local motion estimation for backward compatibility. Adding a new MotionBlur node to the Node Graph defaults the Method to Regularized motion estimation. |

|

Vector Detail |

vectorDetail |

0.2 |

Varies the density of the vector field. The larger vector detail is, the greater the processing time, but the more detailed the vectors should be. A value of 1 generates a vector at each pixel, whereas a value of 0.5 generates a vector at every other pixel. |

|

Resampling |

resampleType |

Bilinear |

Sets the type of resampling applied when retiming: • Bilinear - the default filter. Faster to process, but can produce poor results at higher zoom levels. You can use Bilinear to preview a motion blur before using one of the other resampling types to produce your output. • Lanczos4 and Lanczos6 - these filters are good for scaling down, and provide some image sharpening, but take longer to process. |

|

Matte Channel |

matteChannel |

None |

Where to get the (optional) foreground mask to use for motion estimation: • None - do not use a matte. • Source Alpha - use the alpha of the Source input. • Source Inverted Alpha - use the inverted alpha of the Source input. • Matte Luminance - use the luminance of the Matte input. • Matte Inverted Luminance - use the inverted luminance of the Matte input. • Matte Alpha - use the alpha of the Matte input. • Matte Inverted Alpha - use the inverted alpha of the Matte input. |
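The Shutter Time and Vector Detail descriptions both imply simple arithmetic, which the following sketch works through. The function names are hypothetical. The shutter conversions follow directly from the 0.5 = 180 degrees = 1/48 s at 24 fps example; the vector spacing formula is an assumption that extends the two stated data points (1 gives every pixel, 0.5 gives every other pixel) to other values.

```python
from fractions import Fraction


def shutter_angle(shutter_time: float) -> float:
    """Equivalent mechanical shutter angle in degrees (0.5 -> 180)."""
    return shutter_time * 360.0


def exposure_seconds(shutter_time, fps) -> Fraction:
    """Exposure time per frame as an exact fraction: shutter_time / fps."""
    # Going through str() keeps decimal inputs such as 0.75 exact.
    return Fraction(str(shutter_time)) / Fraction(str(fps))


def vector_spacing(vector_detail: float) -> float:
    """Approximate spacing, in pixels, between generated vectors.

    Assumption: spacing = 1 / vector_detail, which matches the two
    documented cases (1 -> 1 pixel, 0.5 -> 2 pixels).
    """
    if vector_detail <= 0:
        raise ValueError("vector_detail must be positive")
    return 1.0 / vector_detail


print(shutter_angle(0.5))          # 180.0
print(exposure_seconds(0.5, 24))   # 1/48
print(exposure_seconds(0.75, 24))  # 1/32
```

Under the same assumption, the default Vector Detail of 0.2 would correspond to roughly one vector every fifth pixel.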
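The Knob (Scripting) column gives the names used to address these controls from Python. The sketch below collects the documented defaults in a dictionary and applies overrides to any node-like object whose knobs support `setValue`, which is how knobs are set through Nuke's Python API. The helper name `apply_motionblur_knobs`, the `"MotionBlur"` node class string in the usage comment, and the exact string values accepted by the dropdown knobs are assumptions to verify in your version of Nuke.

```python
# Knob names and default values as listed in the table.
# gpuName is display-only and motionEstimation has a script-dependent
# default, so neither has an entry here.
MOTIONBLUR_DEFAULTS = {
    "useGPUIfAvailable": True,
    "shutterSamples": 3,
    "shutterTime": 0.75,
    "vectorDetail": 0.2,
    "resampleType": "Bilinear",
    "matteChannel": "None",
}

# Knobs that may be set even though they have no fixed default above.
SETTABLE_KNOBS = set(MOTIONBLUR_DEFAULTS) | {"motionEstimation"}


def apply_motionblur_knobs(node, **overrides):
    """Set documented MotionBlur knobs on a node-like object.

    `node` only needs to support node[name].setValue(value), so the
    function can be exercised outside Nuke with a stand-in object.
    """
    unknown = set(overrides) - SETTABLE_KNOBS
    if unknown:
        raise KeyError("not a documented MotionBlur knob: %s" % sorted(unknown))
    for name, value in overrides.items():
        node[name].setValue(value)
    return node


# Inside Nuke (not run here), usage might look like:
#   import nuke
#   mb = nuke.createNode("MotionBlur")   # class name is an assumption
#   apply_motionblur_knobs(mb, shutterSamples=8, shutterTime=0.5)
```

Rejecting unknown names up front is a design choice for the sketch: it catches typos in knob names before any value on the node is changed.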