Compositing Using Google Jump Data

CaraVR's Google Jump toolset lets you quickly build point clouds from Google Jump depth data and camera metadata for use in depth-dependent workflows. The depth data is particularly useful for positioning 3D elements accurately before rendering them back to 2D with RayRender.

To set up the toolset:

| 1. | Navigate to CaraVR > Toolsets > Google_Jump_Depth_To_Points. |

The preset node tree is added to the Node Graph.

| 2. | If your script doesn't already contain left and right views, Nuke prompts you to create them. |

| 3. | Connect the source image to the Image Input and the depth information to the Depth Input. |

Note: Ensure that the colorspace of the Read node connected to the Depth Input is set to linear.

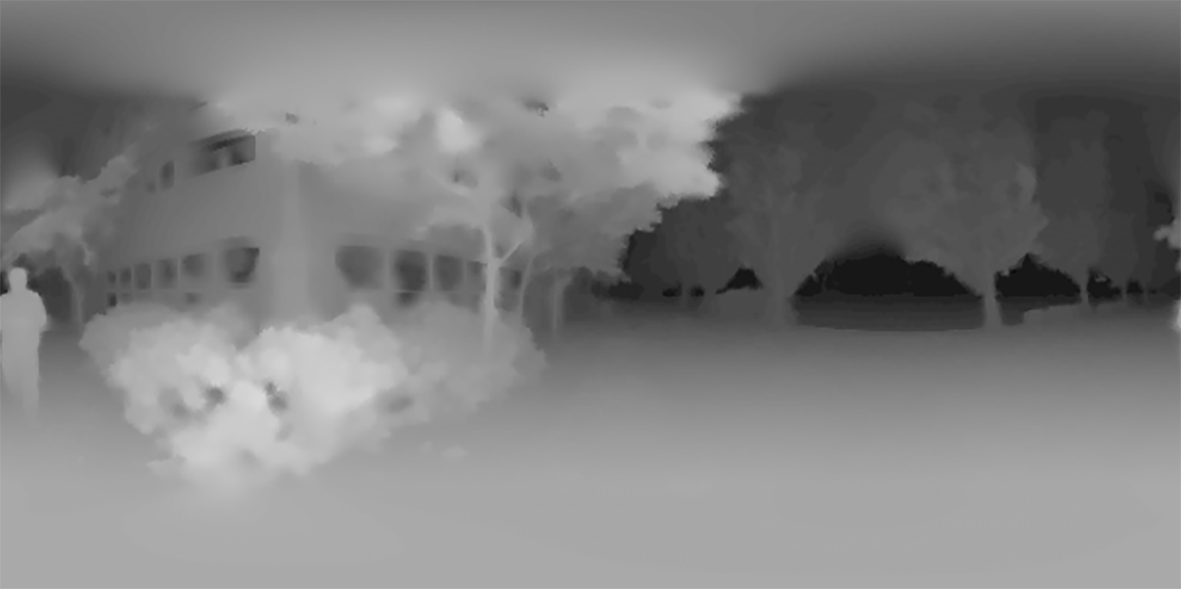
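The linear colorspace note matters because a Read node converts pixel values from the file's colorspace into Nuke's linear working space. If the depth file is read as sRGB, every stored depth value is pushed through the sRGB decoding curve and changes. The following minimal Python sketch assumes the standard piecewise sRGB curve. The function name `srgb_to_linear` is hypothetical and does not correspond to any Nuke API. It shows what happens to a mid-grey depth value:

```python
def srgb_to_linear(c):
    """Standard piecewise sRGB decoding curve (IEC 61966-2-1).

    Hypothetical helper for illustration; not part of Nuke or CaraVR.
    """
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


stored = 0.5                      # depth value as written in the file
misread = srgb_to_linear(stored)  # value an sRGB-interpreted Read would pass on
print(round(misread, 3))          # prints 0.214
```

Because the curve is monotonic, brighter pixels still read as closer. However, the spacing between depth values is distorted, so points would land at the wrong distances. With the colorspace set to linear, no conversion is applied and the stored values pass through unchanged.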

| 4. | Connect a Viewer to the depth Read node to view the depth data. Brighter areas are closer to the rig and darker areas are farther away. |

In the example, the bushes to the left of the image are in the foreground and the trees to the right are in the background.
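The "brighter is closer" relationship in step 4 can be illustrated by decoding pixel values into distances. This is a minimal sketch under an assumed encoding, not the documented Google Jump format. It assumes that a value of 1.0 marks a near plane, 0.0 marks a far plane, and the values in between are linear in inverse depth (1/distance). The `near` and `far` defaults, the function name `decode_depth`, and the sample pixel values are hypothetical and chosen only for illustration.

```python
def decode_depth(value, near=0.5, far=100.0):
    """Convert a normalized depth-map pixel value to a distance.

    ASSUMPTION (hypothetical encoding): 1.0 = near plane, 0.0 = far plane,
    linear in inverse depth between the two. near/far are illustrative.
    """
    if not 0.0 <= value <= 1.0:
        raise ValueError("depth value must be in [0, 1]")
    inv_near = 1.0 / near
    inv_far = 1.0 / far
    # Interpolate in inverse depth, then invert to get a distance.
    inverse_depth = inv_far + value * (inv_near - inv_far)
    return 1.0 / inverse_depth


# Hypothetical pixel values, not sampled from the example image.
bush = decode_depth(0.8)  # bright pixel, e.g. foreground bushes
tree = decode_depth(0.1)  # dark pixel, e.g. background trees
assert bush < tree        # brighter decodes to a smaller distance
```

An inverse-depth encoding is a common choice for depth maps because it gives more precision to nearby surfaces. Check the actual encoding of your depth files before relying on specific distances.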

| 5. | Attach a Viewer to the RayRender node to view the scene. |

The toolset uses the custom GoogleJumpConfig node to read the camera metadata from the .json file produced by the rig.

Note: If you update the metadata, click Load from Jump Metadata to update the script.

The depth and camera data are then processed by a BlinkScript kernel, converted to a point cloud, and passed into RayRender.
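The conversion from a depth image to a point cloud amounts to casting one ray per pixel and placing a point along that ray at the pixel's distance. The following plain-Python sketch is not the toolset's BlinkScript kernel. It assumes a lat-long (equirectangular) distance map whose values have already been decoded into distances. It also assumes a Y-up coordinate system in which the image center looks down -Z. For simplicity, it ignores any per-eye ray offset of a stereo panorama and treats every ray as starting from a single center point. The function name `equirect_to_points` is hypothetical.

```python
import math


def equirect_to_points(distances):
    """Unproject a lat-long distance map to 3D points (hypothetical helper).

    distances: list of rows, each a list of per-pixel distances from the
    camera center. Returns (x, y, z) tuples, Y up, -Z at the image center.
    Simplification: all rays start at one center point (no stereo offset).
    """
    height = len(distances)
    width = len(distances[0])
    points = []
    for row in range(height):
        # Latitude at the pixel center: +90 degrees at the top, -90 at the bottom.
        lat = math.pi / 2 - (row + 0.5) / height * math.pi
        for col in range(width):
            # Longitude at the pixel center: -180 degrees at the left, +180 at the right.
            lon = (col + 0.5) / width * 2 * math.pi - math.pi
            d = distances[row][col]
            # Scale the unit ray direction by the pixel's distance.
            x = d * math.cos(lat) * math.sin(lon)
            y = d * math.sin(lat)
            z = -d * math.cos(lat) * math.cos(lon)
            points.append((x, y, z))
    return points


# A tiny 3x3 map where every pixel is 5 units away.
pts = equirect_to_points([[5.0] * 3 for _ in range(3)])
print(pts[4])  # center pixel: (0.0, 0.0, -5.0)
```

Each point lies exactly its pixel's distance from the center. This is why geometry placed at those positions lines up with the filmed surfaces when it is rendered back through the same camera.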

The default toolset adds a cube to the scene, using the injected data to position it accurately. You can swap out the example geometry to test other objects within the scene.

| 6. | You can also examine the scene in the 3D Viewer by pressing Tab in the Viewer. Navigate around the 360° scene using the standard Nuke navigation controls: Ctrl/Cmd+drag to rotate the camera and Alt+drag to pan. |