Using BigCat

We’ve begun extending the CopyCat feature set into a new node called BigCat.

When to Use BigCat?

BigCat builds on the same model network as CopyCat but introduces features designed for generalizing across larger datasets.

Use CopyCat when working with smaller datasets and focused tasks - like training a model for a single shot or a set of very similar shots. BigCat, on the other hand, is geared toward broader generalisation across hundreds or even thousands of images.

This approach is particularly effective when you can programmatically assemble a dataset from previous work - for example, reusing mattes from an earlier film when working on a sequel. It’s also well suited for projects that incorporate procedurally generated synthetic training data, as discussed in a previous Foundry Live session.

CopyCat-generated .cat files are fully compatible with BigCat. If you’ve already trained a model for a specific shot using CopyCat and want to generalise it for other shots, you can use that model as a starting point. However, because such models are often overfitted to their original shots, you may get better results by training a new model from scratch with your larger dataset.

BigCat .cat files can be used with the CopyCat node. However, if you continue training using CopyCat, the additional BigCat-specific metadata, such as the custom loss graph, may be lost.

BigCat Features

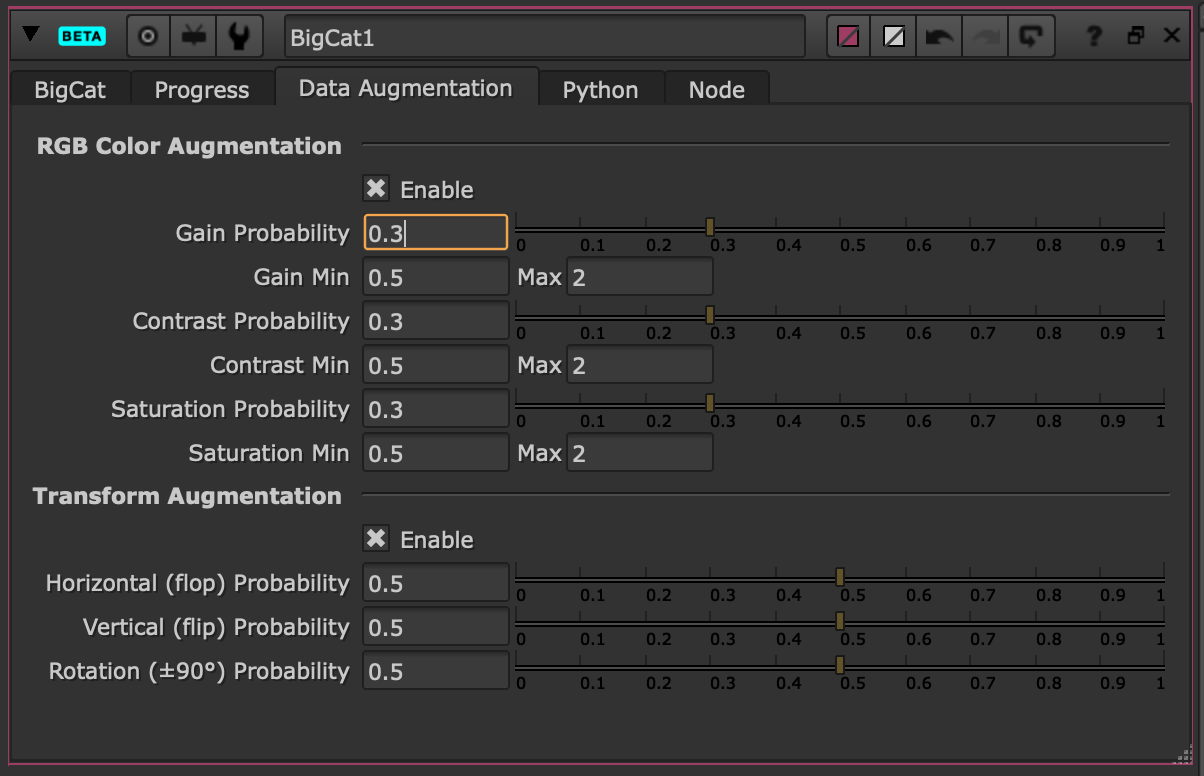

Automatic Data Augmentation

This tab introduces probabilistic grade and transform changes to help create extra variation in the dataset. The extra variation helps BigCat be more robust to different lighting conditions and movements within unseen frames/shots. However, if you’re training BigCat for just a single shot, this additional variation in the dataset can hinder the training.

The default probability is currently set to zero. When we used this augmentation feature to train the CopyCat initial weights, we set the probability to 0.5.

Note: These are the types of augmentation we used when training the CopyCat initial weights. There is scope to add further methods of augmentation in the future.
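To make the idea of a probability-gated augmentation concrete, here is a minimal sketch in plain Python. It is not BigCat's implementation: the function name `augment_pair`, the gain range, and the use of a mirror as the transform are all assumptions chosen for illustration, and it assumes the grade-style change is applied to the input only.

```python
import random

def augment_pair(inp, gt, probability, rng):
    """Randomly vary one (input, ground truth) pair of 1-D pixel rows."""
    inp, gt = list(inp), list(gt)
    # Grade-style change: scale the input by a random gain.
    # The 0.8-1.2 range is an illustrative assumption.
    if rng.random() < probability:
        gain = rng.uniform(0.8, 1.2)
        inp = [p * gain for p in inp]
    # Transform-style change: mirror both rows so they stay aligned.
    if rng.random() < probability:
        inp, gt = inp[::-1], gt[::-1]
    return inp, gt

rng = random.Random(0)
inp, gt = [0.1, 0.5, 0.9], [0.0, 1.0, 1.0]

# Probability 0 leaves every pair untouched.
unchanged = augment_pair(inp, gt, 0.0, rng)

# Probability 0.5 mirrors roughly half of the pairs it sees.
flipped = sum(augment_pair(inp, gt, 0.5, rng)[1] != gt for _ in range(1000))
```

With a probability of zero the dataset is passed through as-is, which is why a single-shot training run is unaffected unless you raise the value.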

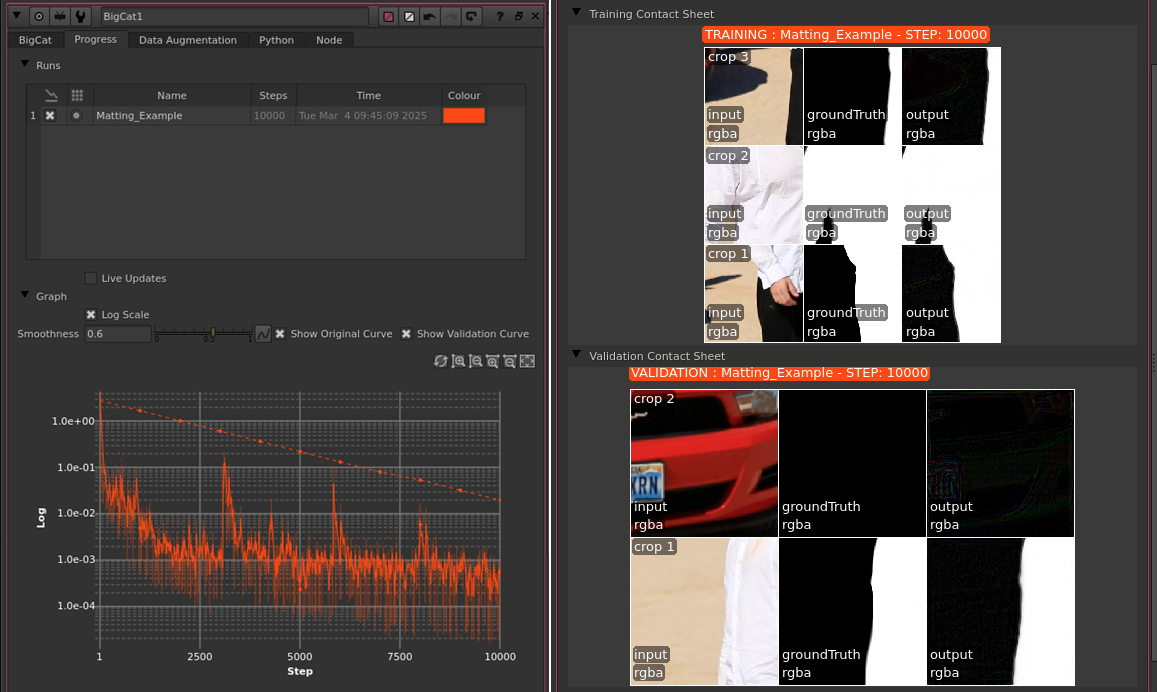

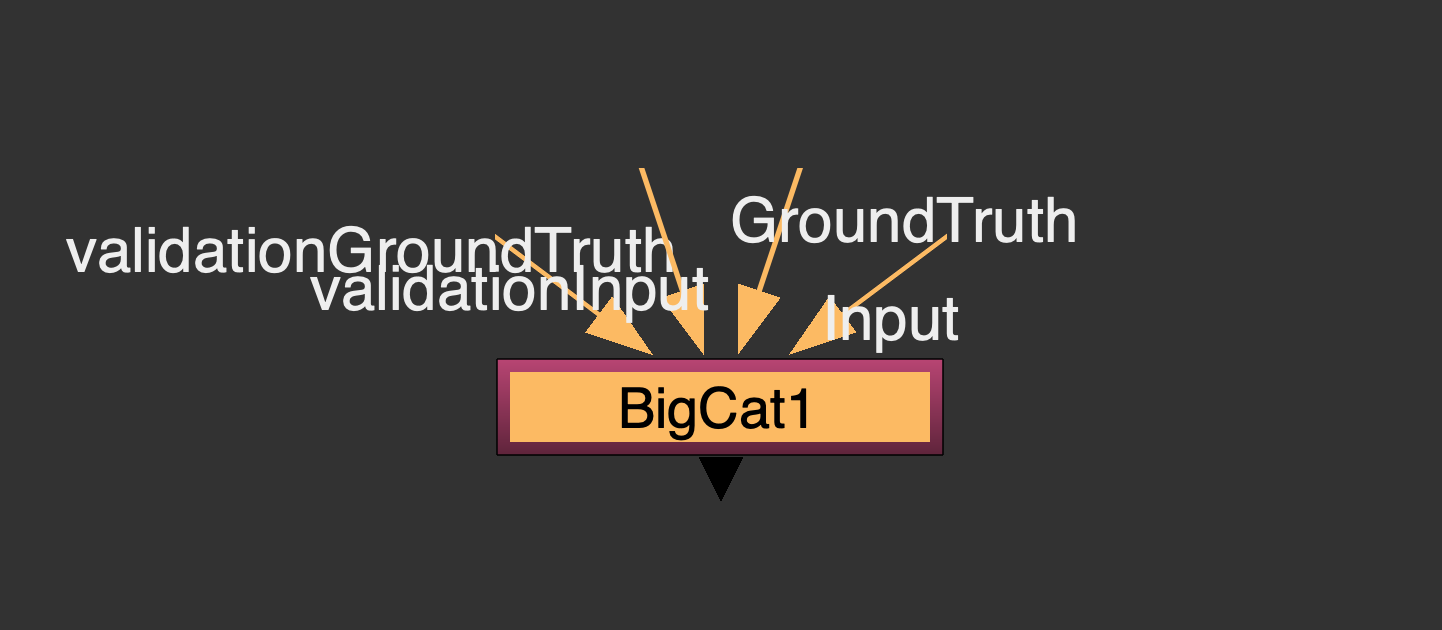

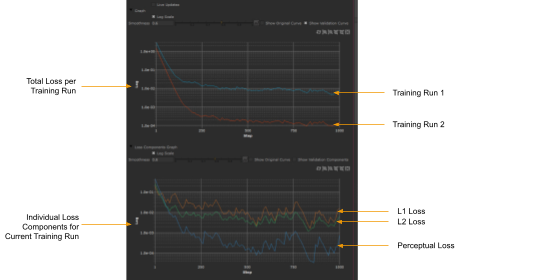

Data Validation

These optional new inputs to the node allow you to feed in a validation dataset. This should consist of input/ground truth frames not included in the main dataset, which are used to measure how well the model performs on unseen data. The validation loss is plotted alongside the training loss in the training graph. This helps you prevent the model from overfitting to the training dataset, so that it performs well across a wider range of shots. Validation loss is plotted as a dotted line and can be disabled using the Show Validation Curve knob.

Validation loss is calculated at the same interval as the checkpoints are written to disk, which can be adjusted in the advanced settings.
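The sketch below illustrates the general pattern of measuring validation loss at each checkpoint interval. It is a toy example under stated assumptions, not BigCat's training loop: the "model" is a single gain value `w`, and `train`, `mse`, and the datasets are hypothetical names invented for illustration.

```python
def mse(pred, target):
    """Mean squared error between two equal-length pixel rows."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)

def train(train_set, val_set, steps, checkpoint_interval, lr=0.1):
    """Fit a single gain w so that w * input approximates the ground truth."""
    w = 0.0
    val_history = []
    for step in range(1, steps + 1):
        x, y = train_set[(step - 1) % len(train_set)]
        # Gradient of mean((w * x - y)^2) with respect to w.
        grad = sum(2 * (w * xi - yi) * xi for xi, yi in zip(x, y)) / len(x)
        w -= lr * grad
        # Validation frames are only evaluated, never trained on.
        if step % checkpoint_interval == 0:
            val_loss = sum(
                mse([w * xi for xi in xv], yv) for xv, yv in val_set
            ) / len(val_set)
            val_history.append((step, val_loss))
    return w, val_history

# Ground truth is the input multiplied by 2 in both datasets.
train_set = [([0.2, 0.4], [0.4, 0.8]), ([0.5, 1.0], [1.0, 2.0])]
val_set = [([0.3, 0.6], [0.6, 1.2])]
w, val_history = train(train_set, val_set, steps=200, checkpoint_interval=50)
```

Here `val_history` holds one entry per checkpoint, which is the kind of series a validation curve would be drawn from. A shorter interval gives a finer curve at the cost of more evaluation time.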

An additional contact sheet has been added to the progress tab so that inference on the validation dataset can also be monitored.

The BigCat node has additional inputs for this validation dataset which, like the training inputs, require an input and a ground truth.

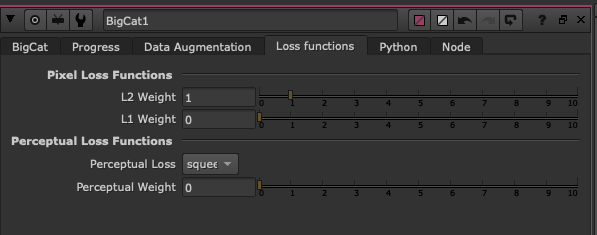

Custom Loss Functions

BigCat introduces control over the loss functions used to guide the training process. In addition to the existing pixel-based loss (MSE), we’ve added a perceptual loss (e.g., LPIPS), which should push BigCat to match structures and semantics rather than raw pixels. This should make the network less brittle to shot-to-shot variation and small misalignments, so a single model trained on diverse examples generalizes better than one trained with pixel losses alone.

In summary, introducing a perceptual loss function to the training process:

• Learns what viewers care about. Perceptual losses compare high-level features instead of per-pixel differences.

• More tolerant of minor misregistration. Pixel losses punish 1–2px shifts harshly.
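As a small illustration of how a pixel loss reacts to misregistration, the sketch below computes the mean squared error between a hard edge and copies of it shifted by one and two pixels. The data and the `mse` helper are made up for this example. A perceptual loss is not computed here, since that requires a trained network.

```python
def mse(pred, target):
    """Mean squared error between two equal-length pixel rows."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)

# A hard matte edge across an 8-pixel row, and shifted copies of it.
edge = [0, 0, 0, 0, 1, 1, 1, 1]
shift_1px = [0, 0, 0, 0, 0, 1, 1, 1]
shift_2px = [0, 0, 0, 0, 0, 0, 1, 1]

loss_1px = mse(shift_1px, edge)  # 0.125
loss_2px = mse(shift_2px, edge)  # 0.25
```

Every pixel the edge crosses counts as a full-strength error, even though the two rows show the same edge in almost the same place.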

Because a perceptual loss can “hallucinate” detail, it’s important to maintain a contribution from the pixel loss function. For this reason, the ‘Custom Loss’ tab offers an L1 option as well as the current L2 (mean squared error). (Rule of thumb: L2 (MSE) punishes big errors more; L1 (MAE) is more robust to outliers.)
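The rule of thumb above can be checked with a few numbers. The following sketch uses hypothetical helper names and made-up pixel values. It compares two predictions with the same total absolute error, one spread evenly and one concentrated in a single outlier pixel.

```python
def l1_loss(pred, target):
    """Mean absolute error (MAE)."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)

def l2_loss(pred, target):
    """Mean squared error (MSE)."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)

target = [0.0, 0.0, 0.0, 0.0]
even_errors = [0.5, 0.5, 0.5, 0.5]  # error spread across all pixels
one_outlier = [2.0, 0.0, 0.0, 0.0]  # same total error in a single pixel

l1_even, l1_outlier = l1_loss(even_errors, target), l1_loss(one_outlier, target)
l2_even, l2_outlier = l2_loss(even_errors, target), l2_loss(one_outlier, target)
# L1 scores both cases 0.5; L2 scores them 0.25 and 1.0.
```

L1 treats the two cases identically, while L2 rates the single large error four times worse than the evenly spread one.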

Tracking & Balancing the Loss Functions

Each loss function has a different range, and depending on the scenario you will likely want to adjust the contribution of each one. The values for each loss function will also change over time as the model improves, so balancing the weightings can be tricky.

The loss values for each of the loss functions will be plotted on a second training graph, called Loss Components Graph, on the progress tab.

The contribution from each loss is multiplied by its weighting, and the results are summed together. When you're tuning the weightings, bear in mind that each type of loss nudges the model in a different direction. An L1 loss minimizes absolute differences, which is more robust to outliers and can give a slightly sharper result. An L2 loss (mean squared error) tends to smooth things out, but is more sensitive to outliers. A perceptual loss, such as LPIPS, is about making the image look good to the human eye: it targets perceptual quality rather than per-pixel differences.
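The weighted sum described above can be sketched as follows. The component names, loss values, and weightings are invented for illustration and do not correspond to BigCat's knob names or defaults.

```python
def total_loss(components, weights):
    """Multiply each loss component by its weighting and sum the results."""
    return sum(weights[name] * value for name, value in components.items())

# Hypothetical raw loss values at one training step. Note the different ranges.
components = {"l1": 0.04, "l2": 0.002, "perceptual": 0.3}

# Hypothetical weightings: L2 switched off, perceptual scaled down so that
# it does not swamp the pixel loss.
weights = {"l1": 1.0, "l2": 0.0, "perceptual": 0.1}

contributions = {name: weights[name] * value for name, value in components.items()}
loss = total_loss(components, weights)  # approximately 0.07
```

Comparing the entries in `contributions` rather than the raw values shows how much each loss actually influences the total after weighting.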

Note: Perceptual loss functions are neural networks which generally have not been trained on commercially permissive datasets. We have chosen not to bundle these with Nuke by default - they will need to be downloaded and installed separately. Perceptual loss will appear in the properties panel only if the .cat files are present in the appropriate location within the Nuke install.