Improving Op Performance¶

Consider using a scalable memory allocator¶

A memory allocator receives requests from the user application for memory

(via malloc()) and responds with the address of a memory location that can

be used by the application. When the application has finished with the memory

it can return this memory to the allocator (via free()). Given the central

role memory plays in most applications the performance of the memory allocator

is of critical importance.

Most general purpose allocators that ship with operating system and the C Standard Library implementations can suffer from a number of pitfalls when used within highly multithreaded applications:

More requests to the operating system for blocks of memory than required. Each request to the operating system results in a system call which requires the CPU on which the request is made to switch to a higher privilege level and execute code in kernel mode. If an allocator makes more requests to the operating system to allocate memory than is required the application’s performance may suffer.

Use of concurrency primitives for mutual exclusion. Many general purpose allocators weren’t designed for use in multithreaded applications and to protect their critical sections, mutexes or other concurrency primitives are used to enforce correctness. When used in highly multithreaded applications the use of mutexes (either directly or indirectly) can negatively impact the application’s performance.

The general purpose allocator, used on most GNU/Linux operating systems is

ptmalloc2 or a variant of it. Whilst ptmalloc2 exhibits low memory overhead it

struggles to scale as requests are made from multiple threads. Therefore, if

profiling indicates your scene is spending a significant portion of time in

calls to malloc()/free() you may consider replacing the general purpose

allocator with a scalable alternative.

Internally Geolib3-MT makes uses a number of techniques to handle memory allocation including the judicious of jemalloc to satisfy some requests for memory in the critical path.

Summary¶

Multithreaded applications benefit from using a memory allocator that is thread aware and thread efficient. Geolib3-MT uses jemalloc internally, you are free to use an allocator of your choice provided the memory allocated by your plugins is also deallocated by the same allocator.

Mark custom Ops as thread-safe where possible¶

Geolib3-MT is designed to scale across multiple cores by cooking locations in

parallel. An Op may declare that it is not thread-safe by calling

Foundry::Katana::GeolibSetupInterface::setThreading(), in which case a

Global Execution Lock (GEL) must be acquired while cooking a location with the

Op. This prevents other thread-unsafe Ops from cooking locations, so is likely

to cause pockets of inefficiency during scene traversal. In profiling your

scenes, first identify and convert all thread unsafe Ops.

Thread-unsafe Ops can easily be identified from the Render Log: if a thread-unsafe Op is detected in the Op tree, a warning is issued:

[WARN plugins.Geolib3-MT.OpTree]: Op (<opName>: <opType>) is marked

ThreadModeGlobalUnsafe - this might degrade performance.

Summary¶

If an Op is not designed to run in a multithreaded context,

Foundry::Katana::GeolibSetupInterface::setThreading() can be used to

restrict processing to a single thread for that op type. Begin scene profiling

and optimization by identifying thread unsafe ops.

Mark custom Ops as collapsible where possible¶

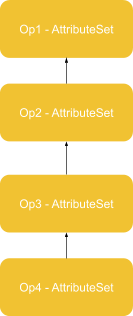

Real world scenes contain many instances of the following op chain construct,

This can arise for a number or reasons, given the versatility of the AttributeSet node, they are frequently used to “hotfix” scenes to ensure a given enabling attribute is present at render time. Or, the sequence of Ops can represent a set of logical steps to be taken during the creation of a given node graph. However, chains of similar Ops represent both a potential overhead and optimization opportunity for Geolib3-MT see (Place chains of collapsible Nodes/Ops together).

Scenes may take advantage of Geolib3-MTs ability to optimize the topology of

the Op tree. Custom Ops may indicate they can be collapsed by calling

Foundry::Katana::GeolibSetupInterface::setOpsCollapsible(). This function

takes an FnAttribute::StringAttribute as parameter, whose value indicates

the name of the attribute to which the collapsed Ops’ arguments will be passed.

For example, consider the chain of four AttributeSet ops above. Geolib3-MT

will collapse this chain of Ops by gathering the Op args for each Op into a

GroupAttribute called “batch”, whose children contain the Op args of each

Op in the chain.

- batch

Op1

Op2

Op3

Op4

Note: the Op args are passed to the collapsed Op in top down order.

Custom user Ops can participate in the Op chain collapsing functionality by

calling setOpsCollapsible() as described above. In the above example

AttributeSet calls setOpsCollapsible("batch") and then tests whether

it’s running in batch mode during its cook() call.

If participating in the Op chain collapsing system, the Op makes the firm

guarantee that the result of processing a collapsed chain of Ops must be

identical to processing each one in sequence. The implications of this mainly

relate to the querying of upstream locations or attributes where those

locations or attributes could have been produced or modified by the chain that

has been collapsed. As a concrete example, consider a two-Op chain in which the

first Op sets an attribute “hello=world” and the second Op in the chain prints

“Hi There!” if “hello” is equal to “world”. If the second Op uses

Interface::getAttr() to query the value of “hello” in the collapsed chain

the result will be empty, as hello has been set on the location’s output but

did not exist on the input. To remedy this, the op can be refactored to call

Interface::getOutputAttr() instead.

Summary¶

Participate in Op chain collapsing when Geolib3-MT identifies a series of Ops

of the same type in series. Use setOpsCollapsible() to indicate your

participation and refactor you Op’s code to handle the batch Op arguments.

Cache frequently accessed attributes¶

While access of an FnAttribute’s data is inexpensive, retention or release of an FnAttribute objects requires modifying a reference count. This will not typically be a problem however, this may accumulate for Ops that create many temporary or short lived FnAttribute objects, especially if many threads are executing the Op at once. In cases where an FnAttribute instance is frequently used, consider whether it may be cached to avoid this overhead.

As a concrete example, consider the following OpScript snippet:

local function generateChildren(count) for i=1,count do local name = Interface.GetAttr("data.name").getValue()..tostring(i) Interface.CreateChild(name) end end

This function creates count children, with names derived from the input attribute data.name. If count is large, accessing this attribute inside the loop causes unnecessary work for two reasons,

Lua must allocate and deallocate an object for the data.name attribute each iteration, causing more work for the Garbage Collector.

This allocation and destruction causes an increment and decrement in the attribute’s atomic reference count, potentially introducing stalls between threads.

The second bottleneck will apply when OpScripts accessing the same set of attributes are executed on many threads. To avoid accessing the reference count so frequently, this snippet may be rewritten as follows:

local function generateChildren(count) local stem = Interface.GetAttr("data.name").getValue() for i=1,count do local name = stem .. tostring(i) Interface.CreateChild(name) end end

Now data.name is accessed only once, outside of the loop, so the reference count is not touched while the loop executes.

Summary¶

Where possible, refactor OpScripts and C++ Ops to access attributes outside of tight loops. This prevents unnecessary modification of attribute reference counts, reducing the number of stalls when these Ops are executed on many threads.