Deep compositing is a way of compositing digital images using data in a different format from standard "flat" compositing. As the name suggests, deep compositing uses additional depth data. This reduces the need for re-rendering, produces high image quality, and helps you solve problems with artifacts around the edges of objects.

A standard 2D image contains a single value for each channel of each pixel. In contrast, deep images contain multiple samples per pixel at varying depths, and each sample stores its own color, opacity, and camera-relative depth.
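To make the difference concrete, here is a minimal Python sketch of one deep pixel and of flattening it back to a single flat value. It assumes point samples with premultiplied color and ignores volumetric samples that span a depth range (which would need to be split where they overlap). The `DeepSample` class and `flatten` function are hypothetical names for illustration, not part of Nuke's API.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class DeepSample:
    # Premultiplied color and opacity of one sample, plus its camera-relative depth
    r: float
    g: float
    b: float
    a: float
    z: float


def flatten(samples: List[DeepSample]) -> Tuple[float, float, float, float]:
    """Collapse one deep pixel into a flat RGBA value by compositing
    its samples front to back with the 'over' operation."""
    r = g = b = a = 0.0
    for s in sorted(samples, key=lambda s: s.z):  # nearest sample first
        t = 1.0 - a  # fraction of light still passing through what is already in front
        r += t * s.r
        g += t * s.g
        b += t * s.b
        a += t * s.a
    return (r, g, b, a)
```

For a pixel holding a 50% opaque red sample at depth 2 in front of an opaque blue sample at depth 5, `flatten` returns `(0.5, 0.0, 0.5, 1.0)`: half the red, plus the half of the blue that shows through it. A flat image keeps only this result; a deep image keeps both samples.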

For example, creating holdouts of objects that have moved in the scene previously required re-rendering the background, and problems frequently occurred with transparent pixels and anti-aliasing. With Nuke's deep compositing node set, you can render the background once and later move your objects to different places and depths without having to re-render the background. Transparent pixels, such as those produced by motion blur, are also represented accurately, so working with deep compositing is not only faster but also gives you higher image quality.
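Why the background does not need re-rendering can be sketched in a few lines: because every sample keeps its depth, occlusion can be recomputed after the fact. The simplified Python below, assuming point samples with premultiplied color, attenuates each sample of a subject by the combined opacity of the matte samples in front of it. Moving the subject only changes its depths, and the holdout is simply recomputed. The `holdout` function is hypothetical and does not describe how Nuke's DeepHoldout node is implemented.

```python
from typing import List, Tuple

# One deep sample: premultiplied (r, g, b, a) plus camera-relative depth z
Sample = Tuple[float, float, float, float, float]


def holdout(subject: List[Sample], matte: List[Sample]) -> List[Sample]:
    """Attenuate each subject sample by the matte samples that lie in front of it."""
    matte_sorted = sorted(matte, key=lambda s: s[4])  # nearest matte sample first
    result = []
    for r, g, b, a, z in subject:
        # Combined opacity of the matte samples nearer to camera than this sample
        cover = 0.0
        for m in matte_sorted:
            if m[4] < z:
                cover += (1.0 - cover) * m[3]
        keep = 1.0 - cover  # fraction of this sample left visible
        result.append((r * keep, g * keep, b * keep, a * keep, z))
    return result
```

For an opaque green ball sample at depth 10 behind a 50% opaque building sample at depth 4, the result is `(0.0, 0.5, 0.0, 0.5, 10.0)`; a matte sample at depth 20 lies behind the ball and has no effect.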

|

|

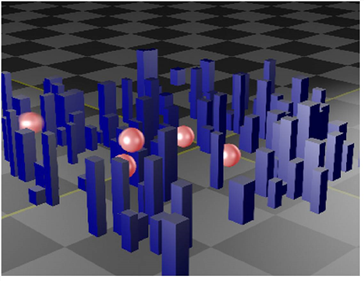

| Deep composite with ball objects among blue buildings. |

With Nuke’s deep compositing node set, you can:

• Read in your deep image with the DeepRead node. See Reading in Deep Footage.

• Merge deep data using the DeepMerge node. See Merging Deep Images.

• Generate holdout mattes from a pair of deep images using the DeepHoldout node. See Creating Holdouts.

• Flatten deep images to regular 2D images using the DeepToImage node, or create point clouds from them using the DeepToPoints node. See Creating Deep Data.

• Sample information at a given pixel using the DeepSample node. See Sampling Deep Images.

• Crop, reformat, and transform deep images in much the same way as you would a regular image, using the DeepCrop, DeepReformat, and DeepTransform nodes. See Cropping, Reformatting, and Transforming Deep Images.

• Create deep images using the DeepFromFrames, DeepFromImage, DeepRecolor, and ScanlineRender nodes. See Creating Deep Data.
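Several of the operations listed above work on the per-pixel sample lists directly. As a rough illustration only, the Python sketch below represents a deep image as a hypothetical dictionary from pixel coordinates to sample lists, merges two such images by pooling their samples in depth order, and flattens a merged pixel front to back. Real deep merging also has to handle volumetric samples that overlap in depth, which this sketch ignores; `deep_merge` and `flatten_pixel` are illustrative names, not Nuke API calls.

```python
from typing import Dict, List, Tuple

Sample = Tuple[float, float, float, float, float]  # (r, g, b, a, z), color premultiplied
DeepImage = Dict[Tuple[int, int], List[Sample]]     # (x, y) -> samples in that pixel


def deep_merge(first: DeepImage, second: DeepImage) -> DeepImage:
    """Combine two deep images by pooling their samples per pixel, ordered by depth."""
    merged: DeepImage = {}
    for pixel in set(first) | set(second):
        pooled = first.get(pixel, []) + second.get(pixel, [])
        merged[pixel] = sorted(pooled, key=lambda s: s[4])  # nearest sample first
    return merged


def flatten_pixel(samples: List[Sample]) -> Tuple[float, float, float, float]:
    """Front-to-back 'over' of depth-sorted samples, giving one flat RGBA value."""
    r = g = b = a = 0.0
    for sr, sg, sb, sa, _ in sorted(samples, key=lambda s: s[4]):
        t = 1.0 - a  # transparency remaining in front of this sample
        r, g, b, a = r + t * sr, g + t * sg, b + t * sb, a + t * sa
    return (r, g, b, a)
```

Merging a half-transparent ball sample at depth 3 with an opaque building sample at depth 7 and flattening the pixel gives `(0.25, 0.25, 0.75, 1.0)`. Because the samples stay separate until flattening, neither element has to be rendered with the other in view.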