Device Assignment

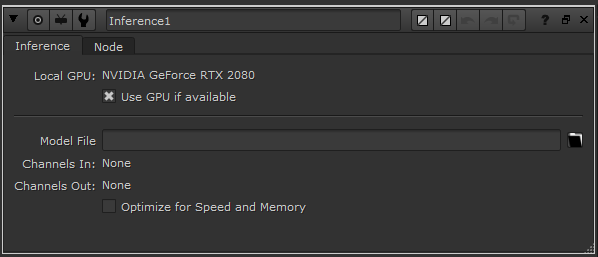

In the Inference node’s properties panel, you may have noticed a Use GPU if available checkbox. When this box is ticked, the model and the input tensor passed to the forward() function are transferred to the GPU for processing. When it is unticked, they are transferred to the CPU instead.

For our .cat file to work correctly with this knob, any other tensors created in our model’s forward() function must also be on the correct device.

TorchScript

Using our Cifar10ResNet example, we can edit the following lines of the forward() function to ensure that the output tensors will be created on the same device as the input tensor:

    def forward(self, input):
        """
        :param input: A torch.Tensor of size 1 x 3 x H x W representing the input image
        :return: A torch.Tensor of size 1 x 1 x H x W of zeros or ones
        """
        # Here we find out which device the input tensor is on, and assign our device accordingly
        if input.is_cuda:
            device = torch.device('cuda')
        else:
            device = torch.device('cpu')

        modelOutput = self.model.forward(input)
        modelLabel = int(torch.argmax(modelOutput[0]))

        plane = 0
        if modelLabel == plane:
            output = torch.ones(1, 1, input.shape[2], input.shape[3], device=device)
        else:
            output = torch.zeros(1, 1, input.shape[2], input.shape[3], device=device)
        return output

This device variable should be used for all tensors created in the forward function to ensure they are created on the correct device. Having added these changes, we can create the .pt and .cat files as before.
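The same idea can be checked outside Nuke before creating the .cat file. The following is a minimal sketch, not part of the Cifar10ResNet example: ThresholdMask is a hypothetical module, and it reads the device from input.device rather than branching on input.is_cuda, which is an alternative way of obtaining the same information. It assumes only that PyTorch is installed, and it runs the scripted module on the CPU and, if one is available, on a GPU.

```python
import torch


class ThresholdMask(torch.nn.Module):
    """Hypothetical stand-in module, used only to illustrate device handling."""

    def forward(self, input: torch.Tensor) -> torch.Tensor:
        # Read the device directly from the input tensor.
        device = input.device
        height, width = input.shape[2], input.shape[3]
        # Every tensor created inside forward() is given the same device.
        ones = torch.ones(1, 1, height, width, device=device)
        zeros = torch.zeros(1, 1, height, width, device=device)
        # Average over the channels, keeping a 1 x 1 x H x W shape.
        mean = input.mean(dim=1, keepdim=True)
        return torch.where(mean > 0.5, ones, zeros)


scripted = torch.jit.script(ThresholdMask())

# Always test on the CPU; add the GPU only when one is present.
devices = [torch.device("cpu")]
if torch.cuda.is_available():
    devices.append(torch.device("cuda"))

for dev in devices:
    image = torch.rand(1, 3, 8, 8, device=dev)
    result = scripted(image)
    # The output should live on the same device as the input.
    assert result.device == image.device
    assert result.shape == (1, 1, 8, 8)
```

If a tensor inside forward() were created without the device argument, it would default to the CPU, and the torch.where call would be expected to fail with a device mismatch once the input is on the GPU.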

Inference

Toggle the Use GPU if available checkbox in the Inference node on and off to verify that the model and tensors are transferred correctly to the selected device. In most cases, you should see a noticeable difference in processing time between the CPU and GPU.
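For a rough comparison outside Nuke, the timing difference can also be sketched in plain PyTorch. This is an illustrative sketch only and does not replace the check in the Inference node: time_forward is a hypothetical helper, and the small convolutional network is a stand-in for your own model. The torch.cuda.synchronize() calls are there because GPU work is queued asynchronously, so timings taken without them can be misleading.

```python
import time

import torch


def time_forward(model: torch.nn.Module, device: torch.device, runs: int = 5) -> float:
    """Return the mean forward-pass time in seconds on the given device."""
    model = model.to(device).eval()
    image = torch.rand(1, 3, 64, 64, device=device)
    with torch.no_grad():
        model(image)  # warm-up run, excluded from the timing
        if device.type == "cuda":
            torch.cuda.synchronize()  # wait for queued GPU work before starting the clock
        start = time.perf_counter()
        for _ in range(runs):
            model(image)
        if device.type == "cuda":
            torch.cuda.synchronize()  # wait for the GPU to finish before stopping the clock
    return (time.perf_counter() - start) / runs


# Stand-in network for illustration only; substitute your own model.
stand_in = torch.nn.Sequential(
    torch.nn.Conv2d(3, 8, kernel_size=3, padding=1),
    torch.nn.ReLU(),
    torch.nn.Conv2d(8, 1, kernel_size=3, padding=1),
)

timings = {"cpu": time_forward(stand_in, torch.device("cpu"))}
if torch.cuda.is_available():
    timings["cuda"] = time_forward(stand_in, torch.device("cuda"))

for name, seconds in timings.items():
    print(f"{name}: {seconds * 1000:.2f} ms per forward pass")
```

With a network this small, the GPU may show little or no advantage; the gap generally grows with model and image size.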

Note

Inference currently supports only single-GPU usage; multi-GPU processing is not supported.