Tensor Sizes

When writing your model in PyTorch, be aware that when the model is run inside Nuke, the input tensor to your model's forward function will be of size 1 x inChan x inH x inW, where inH and inW are the height and width of the image passed to the Inference node.

Similarly, the output tensor returned from your model's forward function must be of size 1 x outChan x outH x outW, where outH and outW are the height and width of the image output from the Inference node. inChan and outChan are the numbers of channels defined in the Channels In and Channels Out knobs of the CatFileCreator node, respectively.
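The size rule above can be written as a small check. This is only an illustrative sketch: `check_tensor_size` is a hypothetical helper, not part of Nuke or PyTorch, and it works on a plain shape tuple such as `tuple(tensor.shape)`.

```python
def check_tensor_size(shape, channels, height, width):
    """Return True if shape equals 1 x channels x height x width."""
    return tuple(shape) == (1, channels, height, width)


# An RGB input for a 1080 x 1920 image has size 1 x 3 x 1080 x 1920.
rgb_ok = check_tensor_size((1, 3, 1080, 1920), channels=3, height=1080, width=1920)

# A batch of two images does not match the size described above.
batch_ok = check_tensor_size((2, 3, 1080, 1920), channels=3, height=1080, width=1920)

print(rgb_ok, batch_ok)
```

The same check can be applied to both the input and the output of a forward function, using inChan for the first and outChan for the second.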

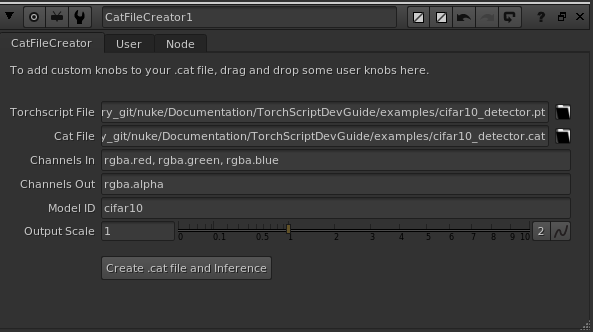

For example, in our simple Cifar10ResNet example, the Cifar10ResNet model processed an RGB image and returned a single channel output image of ones/zeros. We set up our CatFileCreator node as follows:

When this model is run inside Nuke, the input tensor passed to the forward function will be of size 1 x 3 x H x W, and the output tensor returned from the forward function must be of size 1 x 1 x H x W:

    def forward(self, input):
        """
        :param input: A torch.Tensor of size 1 x 3 x H x W representing the input image
        :return: A torch.Tensor of size 1 x 1 x H x W of zeros or ones
        """
        modelOutput = self.model.forward(input)
        # Index of the highest-scoring class for the single image in the batch
        modelLabel = int(torch.argmax(modelOutput[0]))

        plane = 0
        # The output has one channel and the same height and width as the input
        if modelLabel == plane:
            output = torch.ones(1, 1, input.shape[2], input.shape[3])
        else:
            output = torch.zeros(1, 1, input.shape[2], input.shape[3])
        return output

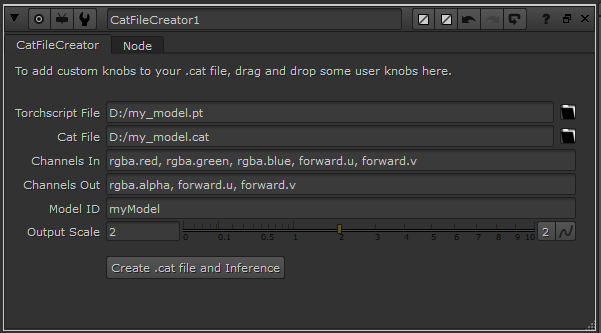

If we’re using a model that processes 5 channels and outputs 3 channels, and whose Output Scale value is 2:

When this model is run inside Nuke, the input tensor to the model forward function will be of size 1 x 5 x inH x inW and its output tensor must be of size 1 x 3 x 2*inH x 2*inW.
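To make the 5-channel, 2x case concrete, here is a minimal sketch of a model whose forward function produces those sizes. `UpscaleSketch` is a hypothetical toy module invented for illustration, not a model from this guide; it assumes a 1x1 convolution for the channel change and nearest-neighbour interpolation for the upscale, and it has not been converted to a .cat file.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class UpscaleSketch(nn.Module):
    """Hypothetical 5-channel-in, 3-channel-out model with a 2x output scale."""

    def __init__(self):
        super().__init__()
        # A 1x1 convolution maps 5 input channels to 3 output channels.
        self.conv = nn.Conv2d(5, 3, kernel_size=1)

    def forward(self, input):
        # input: 1 x 5 x inH x inW
        features = self.conv(input)  # 1 x 3 x inH x inW
        # Double the height and width: 1 x 3 x 2*inH x 2*inW
        return F.interpolate(features, scale_factor=2.0, mode="nearest")


model = UpscaleSketch()
example = torch.rand(1, 5, 32, 48)  # stand-in for a 32 x 48 image with 5 channels
result = model(example)
print(tuple(result.shape))  # (1, 3, 64, 96)
```

Any layers could sit in place of the convolution and interpolation, as long as the returned tensor keeps the 1 x 3 x 2*inH x 2*inW size.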