Full And Half Precision

When creating tensors in our model, we need to make sure that they work correctly with the Optimize for Speed and Memory checkbox. When this box is ticked, the model and its input tensor are converted to half precision. Any other tensors created in the model's forward function must therefore also be half precision; otherwise they keep PyTorch's default dtype (normally float32) and no longer match the input. The simplest way to ensure this is to give every tensor created in the forward function the same dtype as the input tensor.
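As a minimal sketch of the difference, the snippet below compares a tensor created without a dtype against one that copies the dtype of a half-precision input. The helper names `mask_default_dtype` and `mask_matching_dtype` are hypothetical and exist only for this illustration, and the half-precision input is built by hand rather than by the checkbox.

```python
import torch


def mask_default_dtype(input: torch.Tensor) -> torch.Tensor:
    # No dtype given: the tensor uses PyTorch's default dtype,
    # regardless of the precision of the input.
    return torch.ones(1, 1, input.shape[2], input.shape[3])


def mask_matching_dtype(input: torch.Tensor) -> torch.Tensor:
    # dtype copied from the input: the tensor follows whatever
    # precision the input was converted to.
    return torch.ones(1, 1, input.shape[2], input.shape[3], dtype=input.dtype)


# Hand-built stand-in for an input that has been converted to half precision.
half_input = torch.zeros(1, 3, 4, 4, dtype=torch.float16)

print(mask_default_dtype(half_input).dtype)   # default dtype, not float16
print(mask_matching_dtype(half_input).dtype)  # same dtype as half_input
```

Only the second helper keeps its output in step with the input when the precision changes.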

TorchScript

Using our Cifar10ResNet example, we can edit the forward function as follows to ensure that the output tensor has the same dtype as the input tensor:

    def forward(self, input):
        """
        :param input: A torch.Tensor of size 1 x 3 x H x W representing the input image
        :return: A torch.Tensor of size 1 x 1 x H x W of zeros or ones
        """
        modelOutput = self.model.forward(input)
        modelLabel = int(torch.argmax(modelOutput[0]))
        plane = 0
        if modelLabel == plane:
            output = torch.ones(1, 1, input.shape[2], input.shape[3], dtype=input.dtype)
        else:
            output = torch.zeros(1, 1, input.shape[2], input.shape[3], dtype=input.dtype)
        return output

All tensors created in the model should be set up in this way to ensure they have the correct dtype. Having added these changes to the forward function, we can create the .pt and .cat files as before.

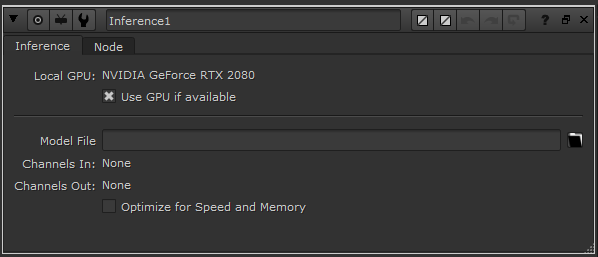

Inference

Try toggling the Optimize for Speed and Memory checkbox in the Inference node on and off to verify that the model and its tensors are all converted to the correct dtype.
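The same check can be roughed out in plain PyTorch before loading the model into Nuke. The sketch below is an assumption-laden stand-in, not the Cifar10ResNet model itself: `PlaneMaskSketch` is a hypothetical module whose classifier is replaced by a fixed label, and `check_output_dtypes` is a hypothetical helper that feeds it a full-precision and a half-precision input, loosely imitating the two states of the checkbox.

```python
import torch


class PlaneMaskSketch(torch.nn.Module):
    """Hypothetical stand-in: the real classifier is replaced by a fixed label."""

    def __init__(self, label: int = 0):
        super().__init__()
        self.label = label  # assumed label the classifier would have predicted

    def forward(self, input: torch.Tensor) -> torch.Tensor:
        plane = 0
        if self.label == plane:
            output = torch.ones(1, 1, input.shape[2], input.shape[3], dtype=input.dtype)
        else:
            output = torch.zeros(1, 1, input.shape[2], input.shape[3], dtype=input.dtype)
        return output


def check_output_dtypes(module: torch.nn.Module, height: int = 32, width: int = 32) -> dict:
    """Return {input dtype: output dtype} for full- and half-precision inputs."""
    results = {}
    for dtype in (torch.float32, torch.float16):
        image = torch.zeros(1, 3, height, width, dtype=dtype)
        results[dtype] = module(image).dtype
    return results


for in_dtype, out_dtype in check_output_dtypes(PlaneMaskSketch()).items():
    # The two dtypes should match when every tensor follows input.dtype.
    print(in_dtype, "->", out_dtype)
```

A mismatch between the two columns would point to a tensor in the forward function that was created without `dtype=input.dtype`.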